MINT 2010

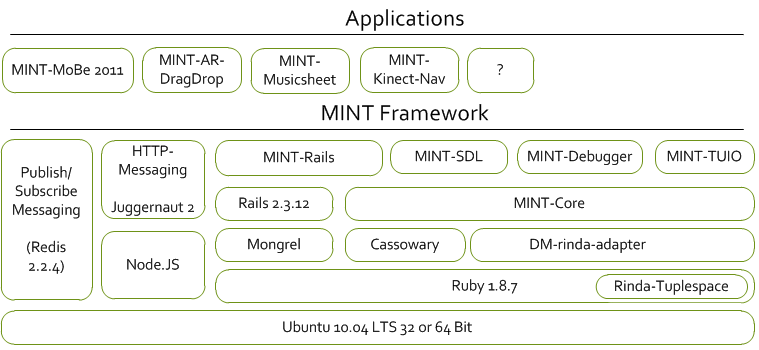

The MINT 2010 architecture and components reflect the state of the implementation of the MINT framework in the years 2010-2011. All articles published in these two years refer to this version, which has been published as open source. We are currently rewriting some core parts of the framework and will publish the next release as soon as the first related publications have been accepted.

Ecosystem

Click on one of the component boxes to learn more about the actual state of implementation.

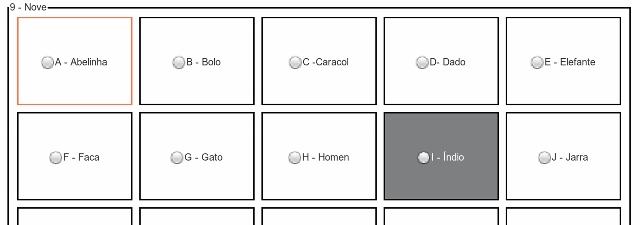

Model-based Design of Gesture-based Interface Navigation

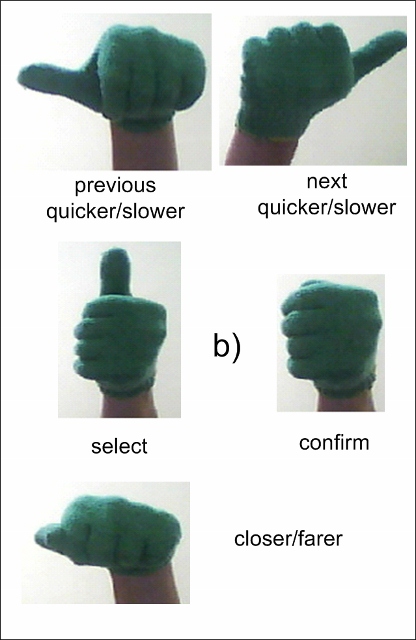

This prototype implements three different model-based gesture navigation control designs based on state charts and demonstrates that these models can be executed directly. We used this prototype to compare the navigation performance in a user test. The prototype requires the glove-based recognition component.
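To illustrate what directly executing a state chart can look like, here is a minimal sketch in plain Ruby. It is not taken from the prototype: the class name `NavigationChart`, the states (`idle`, `focused`, `activated`) and the gesture events (`select`, `next`, `previous`, `confirm`, `release`) are hypothetical and chosen only for illustration.

```ruby
# Hypothetical navigation state chart. States and gesture events are
# illustrative assumptions, not the models used in the prototype.
class NavigationChart
  # state => { event => target state }
  TRANSITIONS = {
    idle:      { select: :focused },
    focused:   { next: :focused, previous: :focused,
                 confirm: :activated, release: :idle },
    activated: { release: :idle }
  }.freeze

  attr_reader :state, :index

  def initialize(item_count)
    @state = :idle
    @index = 0              # currently highlighted menu item
    @item_count = item_count
  end

  # Feed one recognized gesture into the chart.
  # Returns true if a transition fired, false if the event is ignored.
  def fire(event)
    target = TRANSITIONS.fetch(@state)[event]
    return false if target.nil?

    # Transition actions: move the highlight, wrapping around the item list.
    case event
    when :next     then @index = (@index + 1) % @item_count
    when :previous then @index = (@index - 1) % @item_count
    end

    @state = target
    true
  end
end

chart = NavigationChart.new(3)
accepted = [:select, :next, :next, :confirm].map { |gesture| chart.fire(gesture) }
ignored = chart.fire(:next) # not allowed while an item is activated
```

Because the transition table is plain data, the same model can be inspected, drawn, or exchanged without touching the code that runs it, which is the property the model-based designs rely on.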

The source code is available at GitHub: here .

This prototype was first presented at the MoBe 2011 workshop about model-driven development of user interfaces in Berlin, Germany, October 6th, 2011.

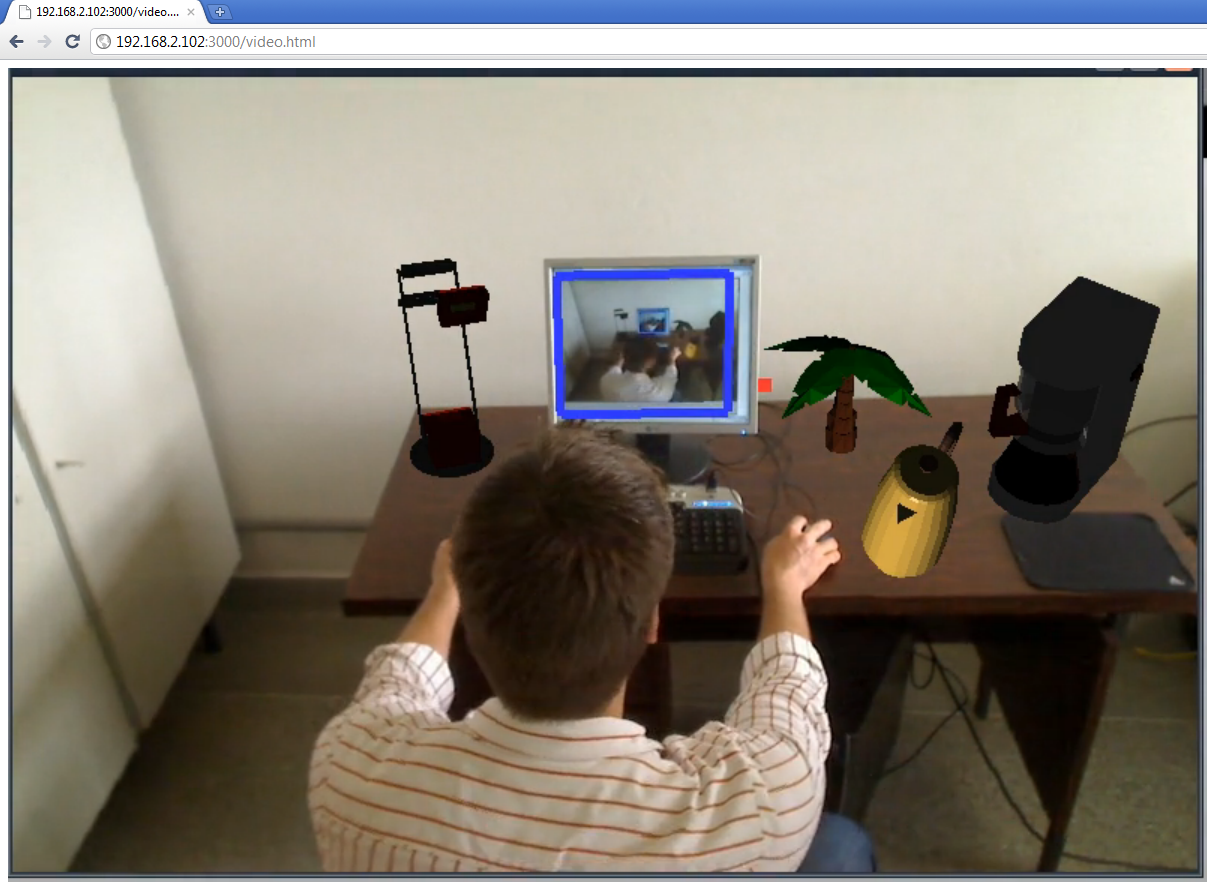

The Augmented Drag-and-Drop

This prototype implements a web furniture shop that supports arranging furniture in an augmented reality to prove our approach. It demonstrates modeling a reality spanning Drag-and-Drop interaction between a 2D browser and an AR scene. Currently it supports dragging furniture with two modes: by using a mouse or by using gestures with two different colored gloves. One hand is used for pointing and the other one for selecting and dragging the objects.

The source code is available at GitHub: here .

This prototype was first presented at the Symposium on Virtual and Augmented Reality in Uberlândia, Brazil, May 23-26, 2011.

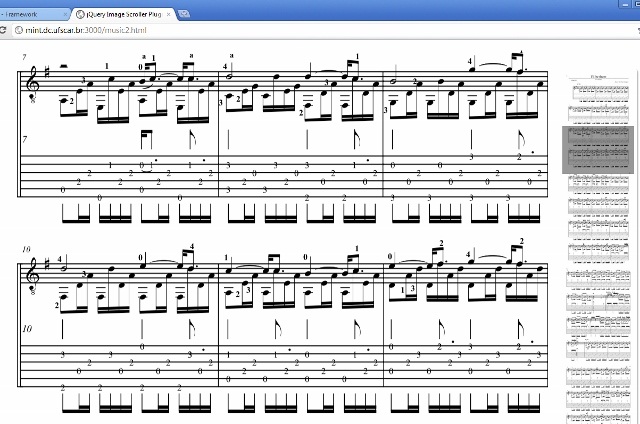

Turn the Sheets with Your Head

During performances, music sheets are used as a guide to perform a musical piece. However, since a piece may span several sheets, extra coordination is necessary to turn pages without disrupting the performance. Therefore, musicians are required to learn the music by heart.

This prototype allows controlling an interface using only head movements and has been implemented using a model-based interface design. It lets a musician turn music sheets by head movements alone.

The source code will be available at GitHub soon.

Gesture processing with the Kinect

We are currently working on an improved gesture recognition based on the Microsoft Kinect controller.

As soon as our first results are published, you will find the source code on GitHub.

Stay tuned…

We are continuously evolving the framework and intend to add further examples and prototypes that demonstrate the capabilities of the MINT platform soon.

Redis Data Structure Server

Redis is an open source, advanced key-value store. It is often referred to as a data structure server since keys can contain strings, hashes, lists, sets and sorted sets.

We currently use the subscribe/notify (publish/subscribe) mechanism of Redis as the communication channel to synchronize the different modes and media of a multimodal application. The MINT platform requires version 2.2.4 (direct download).
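The synchronization idea can be sketched without a running Redis server. The following Ruby example is an in-memory stand-in for a publish/subscribe channel, not the Redis client API and not the MINT implementation. The class `ChannelHub`, the channel name `interactor.button_1` and the two "modes" are assumptions made for illustration.

```ruby
# In-memory stand-in for a publish/subscribe channel hub.
# This is NOT the Redis client API; it only mirrors the pattern.
class ChannelHub
  def initialize
    @subscribers = Hash.new { |hash, channel| hash[channel] = [] }
  end

  # Register a callback that is invoked for every message on the channel.
  def subscribe(channel, &handler)
    @subscribers[channel] << handler
  end

  # Deliver a message to all current subscribers of the channel.
  # Returns the number of subscribers that received it.
  def publish(channel, message)
    handlers = @subscribers.fetch(channel, [])
    handlers.each { |handler| handler.call(message) }
    handlers.size
  end
end

hub = ChannelHub.new
screen_log = [] # hypothetical graphical mode
speech_log = [] # hypothetical speech mode

# Both modes listen to state changes of the same (hypothetical) interactor.
hub.subscribe('interactor.button_1') { |state| screen_log << "highlight:#{state}" }
hub.subscribe('interactor.button_1') { |state| speech_log << "say:#{state}" }

receivers = hub.publish('interactor.button_1', 'focused')
```

Each mode only needs to know the channel name, not the other modes, so a further mode can be added by subscribing to the same channel.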

For further information about Redis please check the official redis homepage .

Juggernaut 2

Juggernaut enables a realtime connection between a server and client web browsers. It can push data to clients from inside a web application, which makes it easier to implement things like multiplayer gaming, chat, group collaboration and more. Juggernaut is built on top of Node.js.

The source code is available at GitHub: here

Node.js version 0.2.4

Node.js is an event-driven I/O server-side JavaScript environment based on V8. It is intended for writing scalable network programs such as web servers. It was created by Ryan Dahl in 2009.

We require Node.js as the backend for Juggernaut. Currently only version 0.2.4 seems to work well with Juggernaut 2; newer versions have problems.

The version 0.2.4 can be downloaded from the official node.js homepage: Direct download .

Please see the official website for further information: http://nodejs.org

MINT-rails

This is the basic rails extension that you have to add to a new rails project to use the MINT framework.

Please see the installation instructions for the MINT-core module on GitHub, which describe in detail how to set up a new MINT-rails project.

The source code for MINT-rails is available at GitHub: here

MINT-SDL

A basic audio interaction resource implementation based on the Simple DirectMedia Layer library. This project demonstrates a way to add sound effects support to your MINT applications.

The source code is available at GitHub: here

Rails 2.3.12

Rails is a web application development framework written in the Ruby language. It is designed to make programming web applications easier by making assumptions about what every developer needs to get started. It allows you to write less code while accomplishing more than many other languages and frameworks. Experienced Rails developers also report that it makes web application development more fun.

The MINT framework is currently only compatible with the old 2.3.x version series of Rails. The reason for this is that we are currently relying on the embedded-actions plugin that has not yet been ported to rails 3.

The rails project homepage is here

MINT-core

The MINT core gem contains all basic AUI and CUI models as well as the basic infrastructure to create interactors and mappings. Please note that you need at least one CUI adapter gem, e.g. the MINT-rails gem, to actually run a system. For initial experiments, however, it is enough to follow the installation instructions of this document.

There is still no documentation for the framework, but a lot of articles about the concepts and theories of our approach have already been published and can be accessed from our project site .

Detailed installation instructions and source code are available at GitHub: here

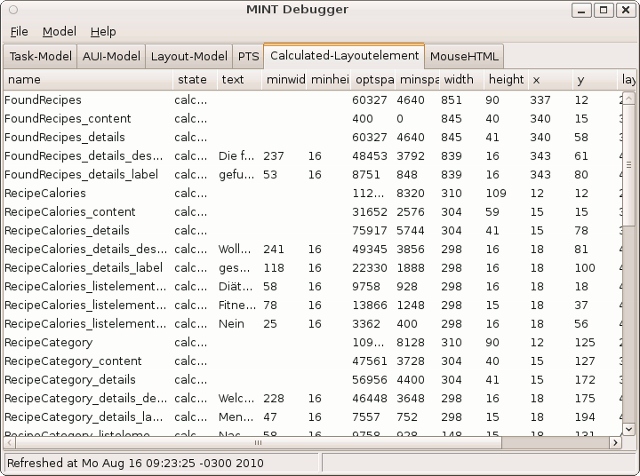

MINT-Debugger

The Multimodal Interaction Framework debugger enables model inspection at system run-time.

The source code is available at GitHub: here

MINT-TUIO

We are currently working on adding multi-touch support to the MINT platform based on the TUIO protocol.

As soon as the implementation is finished you will find the source code published at github.

Mongrel

Mongrel is an open-source HTTP library and web server written in Ruby by Zed Shaw. It is used to run Ruby web applications and presents a standard HTTP interface. This makes layering other servers in front of it possible using a web proxy, a load balancer, or a combination of both, instead of having to use more conventional methods employed to run scripts such as FastCGI or SCGI to communicate. This is made possible by integrating a custom high-performance HTTP request parser implemented using Ragel.

The source code is available here

Cassowary Ruby Interface and Ubuntu 10.04 LTS Debian packages

Cassowary is an incremental constraint solving toolkit that efficiently solves systems of linear equalities and inequalities. Constraints may be either requirements or preferences. Client code specifies the constraints to be maintained and the solver updates the constrained variables to have values that satisfy the constraints. The cassowary constraint solver is copyrighted by Greg J. Badros and Alan Borning and has been released under GPL.

The official website can be found here

We implemented a Ruby gem that makes the Cassowary C interface usable in Ruby. Furthermore, we packaged the native C library for Ubuntu 10.04 and 12.04 LTS. Installation instructions can be found here: http://github.com/sfeu/cassowary .

Datamapper – Rinda Adapter

DataMapper is an Object Relational Mapper written in Ruby, originally developed by Dan Kubb.

We implemented a DataMapper adapter that stores data in a Rinda tuplespace. Additionally, it offers a subscribe/notification functionality that can be accessed via DataMapper.
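For readers unfamiliar with Rinda, the sketch below uses only the `rinda/tuplespace` library that ships with Ruby to show the two mechanisms the adapter builds on: storing tuples and being notified about writes. It does not use DataMapper or the adapter itself, and the tuple layout `[:interactor, name, state]` is a hypothetical example, not the adapter's storage format.

```ruby
require 'rinda/tuplespace'

# A local, in-process tuplespace (no DRb server needed for this sketch).
space = Rinda::TupleSpace.new

# Subscribe to 'write' events for tuples matching the pattern.
# nil acts as a wildcard in Rinda templates.
observer = space.notify('write', [:interactor, nil, nil])

# Store a tuple. The layout [:interactor, name, state] is an assumption
# made for this example only.
space.write([:interactor, 'button_1', 'focused'])

# The observer queue now holds the notification for the write above.
event, tuple = observer.pop

# Read the stored tuple back without removing it from the space.
stored = space.read([:interactor, 'button_1', nil])
```

`read` leaves the tuple in the space, while `take` with the same pattern would remove it.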

The official site is http://datamapper.org

The Rinda Adapter is available at http://github.com/sfeu/dm-rinda-adapter under the GPLv3 license.

Ruby Language version 1.8

Ruby is a dynamic, open source programming language with a focus on simplicity and productivity. It has an elegant syntax that is natural to read and easy to write. Several popular web frameworks such as Ruby on Rails have been implemented in Ruby. Most of our software is based on Ruby 1.8. We currently do not support newer versions of Ruby (1.9.x), because they are often reported to be slower than version 1.8. Furthermore, newer versions significantly changed the thread handling, which affects our implementations. Ruby version 1.8 is bundled with Ubuntu and can be installed out of the box.

See the official Ruby homepage for further information.

Ubuntu 10.04 LTS

Ubuntu is a fast, secure and easy-to-use operating system used by millions of people around the world. Most parts of our software are based on Linux and the binary versions that we offer are prepared to run on the Long-term-supported (LTS) Ubuntu Linux 10.04.x for 64bit and 32bit.

Please note that we do not support newer versions of Ubuntu, since version 10.04 has proven to run very stably compared to more recent versions. The LTS version will be supported by Ubuntu at least until 2015.

You can download the Ubuntu 10.04.x installation CD from the official Ubuntu release download page.

Further information about Ubuntu can be found on the official Ubuntu homepage

Abstract Interactor Model (AIM)

The Abstract Interactor Model (AIM) describes the media- and mode-independent part of the user interface interactors and therefore the overall interaction controls and views. In a concrete, mode- or media-specific interactor model, the AIM interactors are detailed to include specific features. These features reflect the individual characteristics of a certain mode or medium and enable enhanced accessibility and/or comfort in controlling or presenting the interface. The concrete interactor model features are not relevant for the functional backend, whereas the AIM interactors are limited to the data that is relevant for the functional backend.

The current AIM specification can be found here .

Concrete Interactor Model (CIM)

For each medium or mode, a Concrete Interactor Model (CIM) is used to describe its specific interaction capabilities beyond the basic ones that are already defined in the AIM. One or more concrete interactors can be associated with each AIM interactor for a specific mode or medium. We are currently focusing on describing the concrete graphical HTML interface model.

The graphical HTML concrete interactor model will be published soon.

Interaction Resources (IR)

Interaction Resources are used to capture the capabilities of the concrete devices that make a certain mode available to the user interface.

The current Interaction Resource Model(IRM) specification can be found here .

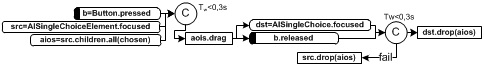

Multimodal Interactor Mapping Model (MIM)

Multimodal mappings are the glue that combines the AUI, CUI and interaction resource interactors. They are used to describe an interaction (step) that can involve several modes and media in a multimodal manner. Furthermore, the mappings can be specified on an abstract level. In this case, overall interaction paradigms such as drag-and-drop can be specified.

The MIM specification can be found here .

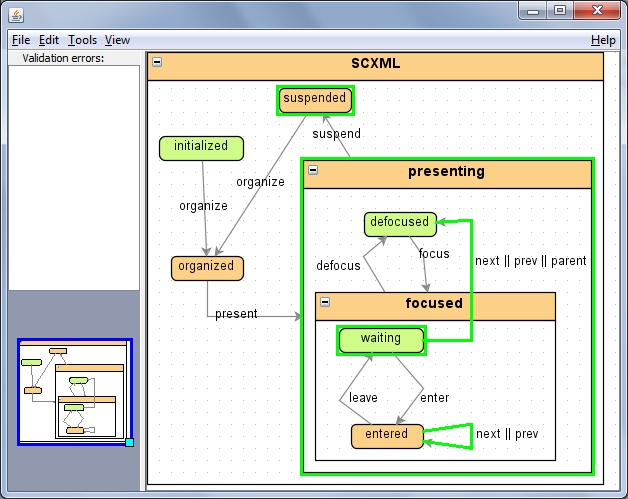

State Chart XML (SCXML) Editor – scxmlgui

Interactors can be designed and manipulated with any SCXML editor. We are using the freely available scxmlgui editor .

The project page of the scxmlgui editor can be found here .

Google Chrome Browser

So far, all multimodal interfaces with the MINT framework are offered as web applications. Since we rely on some of the latest HTML standards such as HTML5, CSS3 and WebSockets we recommend using the latest Google Chrome Browser that can be found here .

Developer-centric approach

Designing and implementing multimodal interfaces for the web is still a complex task. So far, the target audience of our approach is developers who are familiar with current web technologies like HTML5, CSS3, JavaScript, and the Ruby programming language, as well as state chart modelling. Multimodal interfaces are still a subject of basic research and are currently only used in specific niches, such as in-car navigation systems or airplane cockpits.

Even though we hope that in the future multimodal interfaces could be created by people without comprehensive programming skills, such as interaction designers, we are still focusing our approach on discovering the opportunities for multimodal web interfaces and follow a very technical approach.

The User

We are publishing all our example applications and multimodal control modes that we implement as part of our research projects as open source for others to experiment with our results. You are invited to download our example applications, such as the glove-based gesture recognition or the interactive music sheet that can be controlled by head movements to change and extend them as you like.

We are very interested in your feedback! But please remember that we are not focused on creating products. Instead, we are doing basic research on multimodal interfaces and implement only prototypes that demonstrate specific features. Since we are few people, we can’t afford the further work that would be required to use these applications on a daily basis. But if you are interested in extending or using our work, you are very welcome to do so. We will try to give you all the support you need.

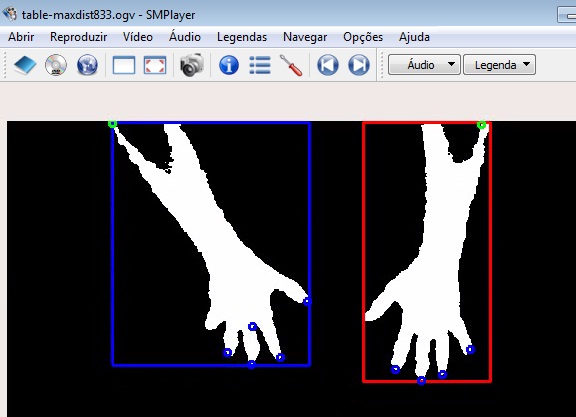

Gloves-based gesture and posture recognition

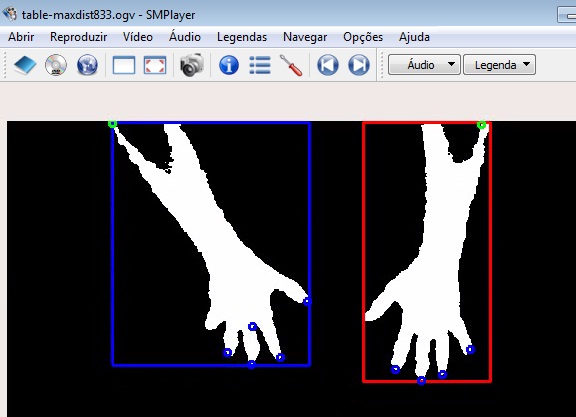

Based on an earlier project on finger spelling recognition for sign language, we implemented a system that recognizes gestures using coloured gloves by segmenting images in the HSV colour space. We use images of 25×25 pixels and trained an artificial neural network, a Multi-Layer Perceptron (MLP), with a 625×100×4 architecture (625 input, 100 hidden and 4 output neurons). This network is able to classify five different postures that we used in some of our research papers to control web applications.
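The layer sizes follow from the image size: 25 × 25 pixels give 625 input values. The Ruby sketch below only illustrates the shape of such a network with a single forward pass. The weights are random and untrained, the sigmoid activation is an assumption (the activation function of the original recognizer is not stated), and the random binary image stands in for a segmented glove mask.

```ruby
# Layer sizes from the text: 25 * 25 = 625 inputs, 100 hidden, 4 outputs.
LAYER_SIZES = [625, 100, 4].freeze

# Sigmoid activation (an assumption made for this sketch).
def sigmoid(x)
  1.0 / (1.0 + Math.exp(-x))
end

# One weight row per neuron; the last element of each row is the bias.
# Weights are random and untrained, for illustration only.
def random_layer(inputs, neurons, rng)
  Array.new(neurons) { Array.new(inputs + 1) { rng.rand(-0.5..0.5) } }
end

# Propagate an input vector through all layers and return the output vector.
def forward(layers, input)
  layers.reduce(input) do |activations, layer|
    layer.map do |weights|
      sum = weights.last # start with the bias
      activations.each_with_index { |value, i| sum += value * weights[i] }
      sigmoid(sum)
    end
  end
end

rng = Random.new(42)
layers = LAYER_SIZES.each_cons(2).map do |inputs, neurons|
  random_layer(inputs, neurons, rng)
end

# Stand-in for a segmented 25x25 glove mask: 1.0 = glove pixel, 0.0 = background.
image = Array.new(25) { Array.new(25) { rng.rand < 0.5 ? 1.0 : 0.0 } }

output = forward(layers, image.flatten)
strongest = output.each_with_index.max[1] # index of the most active output neuron
```

In a trained network the pattern of output activations would be mapped to a posture class; here the result is meaningless apart from its dimensions.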

The glove-based gesture recognition is available on request for Windows. Since we do not have the time to clean up the source code and had some problems running the software on newer Windows versions, we are not willing to publish it on GitHub. But if you are interested in using or improving it, please send us an email and we will send you the recognizer, which can be used, for example, together with the MINT-MoBe2011 project.

Head-movement recognition

We are currently working on a face recognition and head tracking component. We use the Viola-Jones object detection framework for the recognition and the Camshift object tracking algorithm for tracking the head, since we required tracking that runs in constant time and can be applied to the heads of arbitrary persons without the need for training.

As soon as we have published first results we will offer the head tracking component as open source.

Accord.NET

The Accord.NET Framework is a C# framework extending the excellent AForge.NET Framework with new tools and libraries. Accord.NET provides many algorithms for many topics in mathematics, statistics, machine learning, artificial intelligence and computer vision. It includes several methods for statistical analysis, such as Principal Component Analysis, Linear Discriminant Analysis, Partial Least Squares, Kernel Principal Component Analysis, Kernel Discriminant Analysis, Logistic and Linear Regressions and Receiver-Operating Curves. It also includes machine learning topics such as (Kernel) Support Vector Machines, Bayesian regularization for Neural Network training, RANSAC, K-Means, Gaussian Mixture Models and Discrete and Continuous Hidden Markov Models. The imaging and computer vision libraries include projective image blending, homography estimation, the Camshift object tracker and the Viola-Jones object detector.

The Accord.NET project homepage can be found here: http://accord-net.origo.ethz.ch/ .

Microsoft Windows

Unfortunately, some multimodal components, like the glove-based gesture detection or the head tracker, require Microsoft Windows. We are currently porting the glove-based posture and gesture recognition to Ubuntu Linux, since the rest of the framework has only been tested with Linux so far.

New gesture and posture recognition component

We are currently working on a new version of our gesture and posture recognition software that will work under Linux and support both recognition with gloves and recognition without coloured gloves, driven by the Kinect controller.

As soon as we have published first results we will offer the head tracking component as open source.