Multimodal Interactor Mapping Model Specification

W3C Working Group Submission February 3rd 2012

- This version

- http://www.multi-access.de/mint/mim/2012/20120203/

- Latest version

- http://www.multi-access.de/mint/mim/

- Previous version

- none

- Editors

- Sebastian Feuerstack, UFSCar

This document is available under the Document License. See the Intellectual Rights Notice and Legal Disclaimers for additional information.

Overview

Multimodal mappings are the glue that combines the AUI, the CUI, and the interaction resource interactors. They describe an interaction (step) that can involve several modes and media in a multimodal manner. Mappings can also be specified on an abstract level, in which case overall interaction paradigms such as drag-and-drop can be specified.

Mappings rely on the features of the state charts that can receive and process events and have an observable state. Thus, each mapping can observe state changes and trigger events.
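
The two state chart features that mappings depend on, event processing and an observable state, can be illustrated with a minimal sketch. The `StateChart` class, its method names, and the `button` interactor with its "focused" state are assumptions made for illustration only; they are not defined by this specification.

```python
from typing import Callable, Dict, List, Tuple

# Observer signature: (state chart name, previous state, new state)
Observer = Callable[[str, str, str], None]


class StateChart:
    """Hypothetical, minimal state machine with an observable state."""

    def __init__(self, name: str, initial: str,
                 transitions: Dict[Tuple[str, str], str]) -> None:
        self.name = name
        self.state = initial
        # (current state, event) -> next state
        self._transitions = transitions
        self._observers: List[Observer] = []

    def observe(self, callback: Observer) -> None:
        """Register a callback that is notified on every state change."""
        self._observers.append(callback)

    def process_event(self, event: str) -> bool:
        """Process an event; return True if it caused a state change."""
        target = self._transitions.get((self.state, event))
        if target is None:
            return False
        previous, self.state = self.state, target
        for callback in list(self._observers):
            callback(self.name, previous, target)
        return True


pointer = StateChart("pointer", "stopped", {
    ("stopped", "move"): "moving",
    ("moving", "stop"): "stopped",
})
button = StateChart("button", "presenting", {
    ("presenting", "focus"): "focused",
    ("focused", "unfocus"): "presenting",
})


def focus_on_stop(name: str, previous: str, current: str) -> None:
    # A mapping in miniature: observe a state change, then trigger an event.
    if current == "stopped":
        button.process_event("focus")


pointer.observe(focus_on_stop)
pointer.process_event("move")
pointer.process_event("stop")
print(button.state)  # focused
```

The callback plays the role of a mapping here: it observes one state chart and sends an event to another.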

This specification distinguishes between synchronization mappings and multimodal mappings. The former synchronize the interactors’ state machines across different levels of abstraction, such as between task and AUI interactors or between AUI and CUI interactors. The latter synchronize modes with media.

Multimodal Mappings

Multimodal mappings can be pre-defined (e.g. to support a certain form of interaction with a particular device or to implement an interaction paradigm such as drag-and-drop) but are usually specified during application design (e.g. stating that a security-critical command must be confirmed with a mouse click and a voice command).

Each multimodal mapping consists of three basic concepts: Observations, Actions, and Operators. They are described in the following sections.

Observations

Observations are used to observe state charts (state machines) for state changes and are drawn as boxes with rounded edges. A set of observations is stacked vertically. For efficiency reasons, exactly one observation can be marked to be checked first. This observation is indicated by a black stripe at the left end of the observation box. The text in each observation box uses dot notation to refer to a set, a type class, or individual interactors (type and/or instance).

Observation reference text examples:

waits for an interactor that is derived from the AIO interactor class to enter state "presenting"

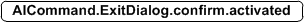

waits for the confirm command of the exit dialog of an application to be activated

Actions

Actions are used to trigger state changes by sending events to state charts or to call functions in the backend. Actions are drawn as boxes with sharp edges and are stacked vertically like observations. There is currently one special action, "all", that can be used to apply an action to all interactors of a class.

Action examples:

- sends a focus event to an AIO interactor.

- sends an unfocus event to all AIO interactors.

- calls a custom function that is implemented inside the interactor.

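
The three kinds of action listed above (an event sent to one interactor, an event sent to all interactors of a class, and a call to a function implemented inside an interactor) might be sketched as follows. The `Interactor` class, the helper function names, and the `describe` function are hypothetical; the specification only defines the graphical notation.

```python
from typing import Any, Iterable, List


class Interactor:
    """Hypothetical interactor that records the events it receives."""

    def __init__(self, name: str, type_class: str) -> None:
        self.name = name
        self.type_class = type_class
        self.events: List[str] = []

    def process_event(self, event: str) -> None:
        self.events.append(event)

    def describe(self) -> str:
        # Stand-in for a custom function implemented inside the interactor.
        return f"{self.name} ({self.type_class})"


def send_event(target: Interactor, event: str) -> None:
    """Action: send an event to a single interactor."""
    target.process_event(event)


def send_event_to_all(interactors: Iterable[Interactor],
                      type_class: str, event: str) -> int:
    """Action using "all": send an event to every interactor of a class."""
    count = 0
    for interactor in interactors:
        if interactor.type_class == type_class:
            interactor.process_event(event)
            count += 1
    return count


def call_function(target: Interactor, function_name: str, *args: Any) -> Any:
    """Action: call a function that is implemented inside the interactor."""
    return getattr(target, function_name)(*args)


registry = [
    Interactor("ok", "AIO"),
    Interactor("cancel", "AIO"),
    Interactor("mouse", "Pointer"),
]

send_event(registry[0], "focus")
notified = send_event_to_all(registry, "AIO", "unfocus")
description = call_function(registry[0], "describe")
print(notified, description)  # 2 ok (AIO)
```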

Operators

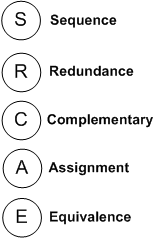

Operators specify multimodal relations and link a set of observations to a set of actions. An operator is drawn as a circle that contains the operator’s initial capital letter.

There are six operators available: Complementary (C), Assignment (A), Redundancy (R), Equivalence (E), Sequence (S), and Timeout (T).

The first four (the CARE properties) have been defined earlier as part of the TYCOON framework [1] and have been detailed to describe relationships between modalities and applied to tasks, interaction languages and devices in [2].

Equivalence describes a combination of modalities in which each modality can be used to express the same desired meaning, but only one modality can be used at a time. For instance, a text input prompt can be answered either by a spoken response or by text typed on a keyboard.

With assignment, a particular modality is defined to be the only one that can be used to express the desired meaning (e.g. a car can only be controlled by a steering wheel). Modalities are used redundantly if each of them can express the desired meaning on its own and they are used simultaneously. For instance, speech and direct manipulation are used redundantly if the user says “show all flights to São Paulo” while pressing the button “São Paulo” with the mouse.

In a complementary usage of modalities, multiple complementary modalities are required to capture the desired meaning. For instance a “put that there” command requires both speech and pointing gestures in order to grasp the desired meaning.

Each operator can optionally state a temporal window (Tw), which is specified on top of the operator circle, as well as an exit condition, which is specified by an arrow under the operator circle and states a set of actions to perform if the multimodal relation could not be fulfilled.
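
As a rough illustration of how the four CARE operators, the temporal window, and the exit condition could interact, consider the following sketch. It rests on simplifying assumptions that are not part of this specification: observations are reduced to names with timestamps in seconds, Complementary and Redundancy are both treated as "all observations within Tw" (they differ only in whether a single modality would already carry the meaning), Equivalence does not enforce that only one modality is used at a time, and all class, function, and observation names are hypothetical. Sequence and Timeout are omitted because their semantics are not described here.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Mapping:
    operator: str                            # "C", "A", "R" or "E"
    observations: Tuple[str, ...]            # names of observed state changes
    temporal_window: Optional[float] = None  # Tw in seconds, None = no limit


def is_fulfilled(mapping: Mapping, seen: Dict[str, float]) -> bool:
    """Check the relation; `seen` maps observation name -> timestamp."""
    times = [seen[name] for name in mapping.observations if name in seen]
    if mapping.operator == "A":
        # Assignment: exactly one modality is allowed at all.
        if len(mapping.observations) != 1:
            raise ValueError("assignment expects exactly one observation")
        return len(times) == 1
    if mapping.operator == "E":
        # Equivalence: any one of the modalities is sufficient.
        return len(times) >= 1
    if mapping.operator in ("C", "R"):
        # Complementary / Redundancy: all observations, within Tw.
        if not times or len(times) != len(mapping.observations):
            return False
        if mapping.temporal_window is None:
            return True
        return max(times) - min(times) <= mapping.temporal_window
    raise ValueError(f"unsupported operator: {mapping.operator}")


def run(mapping: Mapping, seen: Dict[str, float],
        actions: List[Callable[[], str]],
        exit_actions: List[Callable[[], str]]) -> List[str]:
    """Execute the actions, or the exit actions if the relation fails."""
    chosen = actions if is_fulfilled(mapping, seen) else exit_actions
    return [action() for action in chosen]


put_that_there = Mapping("C", ("speech.put_that_there", "gesture.point"),
                         temporal_window=1.5)
actions = [lambda: "move object"]
exit_actions = [lambda: "ask user to repeat"]

in_time = run(put_that_there,
              {"speech.put_that_there": 10.0, "gesture.point": 10.8},
              actions, exit_actions)
too_late = run(put_that_there,
               {"speech.put_that_there": 10.0, "gesture.point": 12.0},
               actions, exit_actions)
print(in_time, too_late)  # ['move object'] ['ask user to repeat']
```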

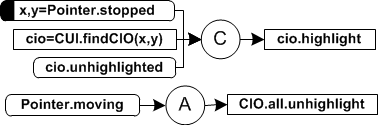

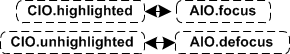

Basic Example: Highlighting on pointing

The figure above depicts an exemplary multimodal mapping that changes the highlighted graphical UI element based on the current pointing position. Boxes with rounded edges stand for “observations” of state changes. Boxes with sharp edges define backend function calls or the triggering of events. The mapping observes the state of the pointer interactor and is triggered as soon as the pointer enters the state “stopped”. The “C” specifies a complementary relation, which requires all of its inputs to be resolved before the mapping fires. Therefore, as soon as the pointer has stopped and its coordinates have been retrieved, the findCIO function is called to check whether a CIO is positioned at these coordinates and whether it is currently not highlighted. The complementary mapping is executed only if all three conditions are satisfied, in which case a highlight event is sent to the CIO.
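
The logic of this mapping can be paraphrased in code. The sketch below is only an approximation under stated assumptions: the `CIO` and `Pointer` classes, the rectangular hit test, and the `find_cio` helper (standing in for the findCIO function) are hypothetical and not taken from an actual implementation.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class CIO:
    """Hypothetical concrete UI element with a rectangular area."""
    name: str
    x: int
    y: int
    width: int
    height: int
    highlighted: bool = False

    def contains(self, px: int, py: int) -> bool:
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)

    def process_event(self, event: str) -> None:
        if event == "highlight":
            self.highlighted = True


@dataclass
class Pointer:
    state: str                           # e.g. "moving" or "stopped"
    position: Optional[Tuple[int, int]]  # None if no coordinates are known


def find_cio(cios: List[CIO], position: Tuple[int, int]) -> Optional[CIO]:
    """Stand-in for findCIO: return the element at the given position."""
    for cio in cios:
        if cio.contains(*position):
            return cio
    return None


def highlight_on_pointing(pointer: Pointer, cios: List[CIO]) -> Optional[CIO]:
    # Observation 1: the pointer has entered the state "stopped".
    if pointer.state != "stopped":
        return None
    # Observation 2: the coordinates of the stopped pointer are available.
    if pointer.position is None:
        return None
    # Observation 3: a CIO is at these coordinates and is not highlighted.
    target = find_cio(cios, pointer.position)
    if target is None or target.highlighted:
        return None
    # Action: all three conditions hold, so send the highlight event.
    target.process_event("highlight")
    return target


cios = [CIO("ok", 0, 0, 100, 40), CIO("cancel", 120, 0, 100, 40)]
first = highlight_on_pointing(Pointer("stopped", (130, 10)), cios)
second = highlight_on_pointing(Pointer("stopped", (130, 10)), cios)
moving = highlight_on_pointing(Pointer("moving", (10, 10)), cios)
```

Here `first` is the "cancel" element, while `second` and `moving` are `None` because the element is already highlighted or the pointer has not stopped.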

Synchronization Mappings

Synchronization mappings are pre-defined together with the interactors and are automatically considered when the designer assembles a user interface by using these interactors.

Exemplary Mappings

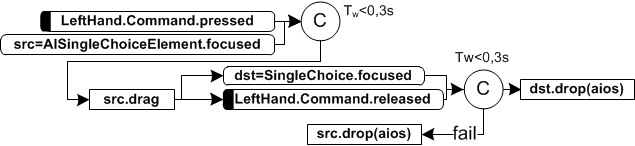

Drag and Drop

Common interaction paradigms can be specified by combining basic interactors with multimodal mappings.

The figure above depicts a mapping that specifies the drag-and-drop functionality for elements (AIChoiceElement) of an abstract list (AIChoice) in the AIM model. The mapping is triggered if (a) the mouse button is pressed (b) while a list (AIChoice) is “focused” (e.g. the mouse pointer is currently positioned over this list) and (c) at least one entry of the list is “chosen”.
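
The three trigger conditions can be written down as a simple check. This is a sketch under assumptions: the dataclasses only borrow the interactor names AIChoice and AIChoiceElement, the state name "pressed" and the function `drag_candidates` are hypothetical, and what the mapping does once it is triggered is defined by the figure rather than by this code.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AIChoiceElement:
    name: str
    state: str = "presenting"


@dataclass
class AIChoice:
    name: str
    state: str = "presenting"
    elements: List[AIChoiceElement] = field(default_factory=list)


def drag_candidates(mouse_button_state: str,
                    choice: AIChoice) -> List[AIChoiceElement]:
    """Return the chosen entries if conditions (a), (b) and (c) all hold."""
    chosen = [e for e in choice.elements if e.state == "chosen"]
    if (mouse_button_state == "pressed"   # (a) mouse button is pressed
            and choice.state == "focused"  # (b) the list is focused
            and chosen):                   # (c) at least one entry is chosen
        return chosen
    return []


playlist = AIChoice("playlist", "focused", [
    AIChoiceElement("song a", "chosen"),
    AIChoiceElement("song b"),
])
dragged = drag_candidates("pressed", playlist)
not_pressed = drag_candidates("released", playlist)
print([e.name for e in dragged])  # ['song a']
```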

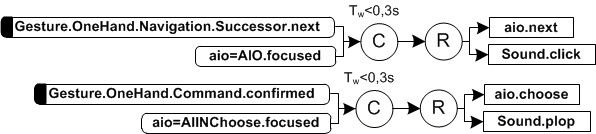

To define how the interface should react to the gestures, we defined two main multimodal mappings, which are depicted in the following figure.

The first one implements the navigation to the next element of the interface and plays a “tick” sound on each successful next movement. In these exemplary mappings, the complementary operator (C) states observations that need to happen within a certain temporal window (Tw), and the redundancy operator (R) publishes information to different media (such as changing to the next element and playing a “click” sound at the same time). The second mapping implements the selection of the list element that is currently in the user’s focus.

[1] Jean-Claude Martin: TYCOON: Theoretical Framework and Software Tools for Multimodal Interfaces; in Intelligence and Multimodality in Multimedia Interfaces; AAAI Press; 1998.

[2] Laurence Nigay and Joelle Coutaz: Multifeature Systems: The CARE Properties and Their Impact on Software Design; in Intelligence and Multimodality in Multimedia Interfaces; 1997.